
Creating a GUI for the Kinect v2

August 2nd, 2016 by Karen | Category: Library Makerspace

In the first semester of this year, the Curtin Makerspace supported an exciting project to create a graphical user interface (GUI) that would make it easy for non-programmers to use the Kinect camera to interact with a range of 3D environments.

The project was conducted under the auspices of Curtin’s Department of Computing as part of the “Software Engineering Project”, a unit in which students work with industry partners and/or Curtin researchers to develop custom software.

In early 2016, Erik Champion (Professor of Cultural Visualisation at the School of Media, Culture and Creative Arts (MCCA)) and I (Dr Karen Miller, Curtin Library and MCCA) submitted a proposal to create the GUI.

The intention was to build on the project completed by Jiayi Zhu through the HIVE's Summer Intern program. Jiayi developed a program for the Kinect that detects a player's gestures and generates the corresponding keystrokes, enabling players to interact fully with a virtual Minecraft world through their movements. We wanted to take this project further by enabling non-programmers to use the Kinect to interact with a variety of 3D virtual worlds.

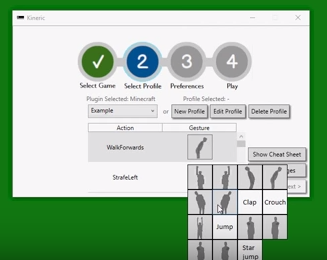

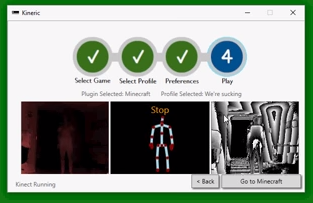

Our proposal was to develop a graphical user interface that would connect a library of Kinect-tracked movements to various interaction modes. We wanted a 'visual library' of gestures so that users could configure their own combinations of commands and gestures. The system could be customised for each application and would also support player profiles, allowing it to be configured differently for each person. We wanted the system to give visual feedback indicating when gestures were valid, when a player was not being tracked, and when errors occurred (with guides to help users recover). We also wanted the user's skeleton to be displayed in a mini-screen showing what the Kinect was picking up. In short, it was to be a portable system that was easy to set up in different locations, where participants could simply plug in the Kinect, choose the gestures they wanted to use, and begin interacting with the game or world.

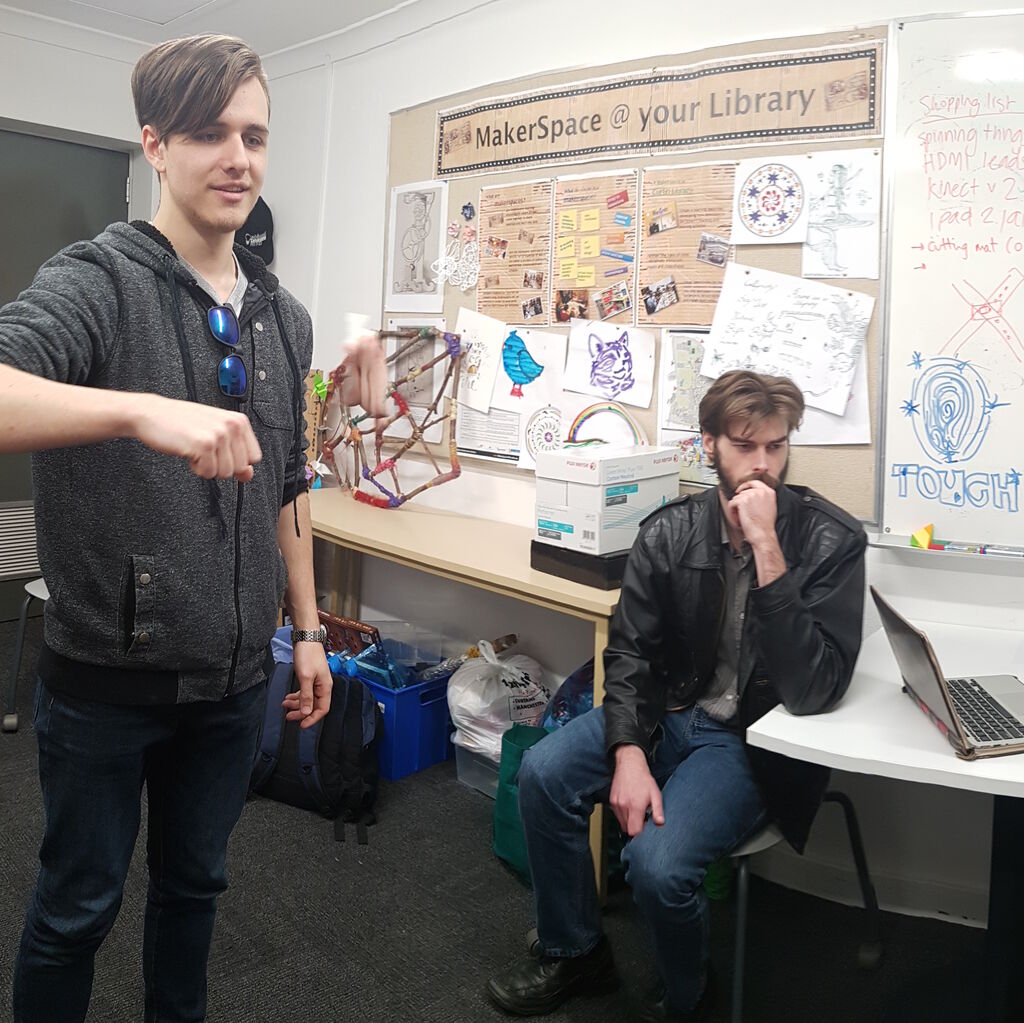

We were very fortunate to have computer science students Campbell Pedersen, Sebastian Robbins and Neil Dempsey work on this project over the past semester. Throughout the semester, Erik and I met with them fortnightly to discuss progress, provide feedback and talk through issues and ideas. By the end of the semester they had produced a working prototype of the user interface (written in C#), as demonstrated in this video. This semester the team (minus Campbell, whose course structure means he is unable to work on it this semester) will continue to improve the product.

For me personally, it's been a great experience to work with programming students and gain an insight into the complex process of creating a software application, including formulating technical specifications and requirements and conducting user testing. I'm excited by the potential of this type of software to bridge real and virtual worlds by offering a fun, physically interactive way to engage with 3D environments.

Once the project is complete, the next step will be to develop ways it can be used in public spaces, such as the Curtin Library.

Campbell and Sebastian testing in the makerspace

Campbell and Neil

Selecting the gestures

Ready to play

It works!